Sep 26, 2023

Introducing the LangChain Integration

Today, Gradient is excited to announce the integration of its cutting-edge LLM fine-tuning platform with the LangChain framework.

The integration brings together two of the AI industry’s most innovative infrastructure tools, helping developers build AI-driven applications with greater efficiency, accuracy and creativity.

About Gradient and LangChain

The Gradient Developer Platform allows users to customize and enhance LLMs with domain-specific data, enabling high-precision outputs tailored to individual use cases. When building on Gradient, users have full control of the data they use and full ownership of the models they create.

LangChain stands out as the pioneering framework for sequencing tasks in AI applications. It allows for the chaining together of AI processes, ensuring seamless and optimized application performance.

What this Integration Means

The Gradient and LangChain integration lets users assign their Gradient models to specific tasks in a LangChain workflow. Within the LangChain framework, users can call the Gradient API to run inference on their own LLMs built on the Gradient platform.

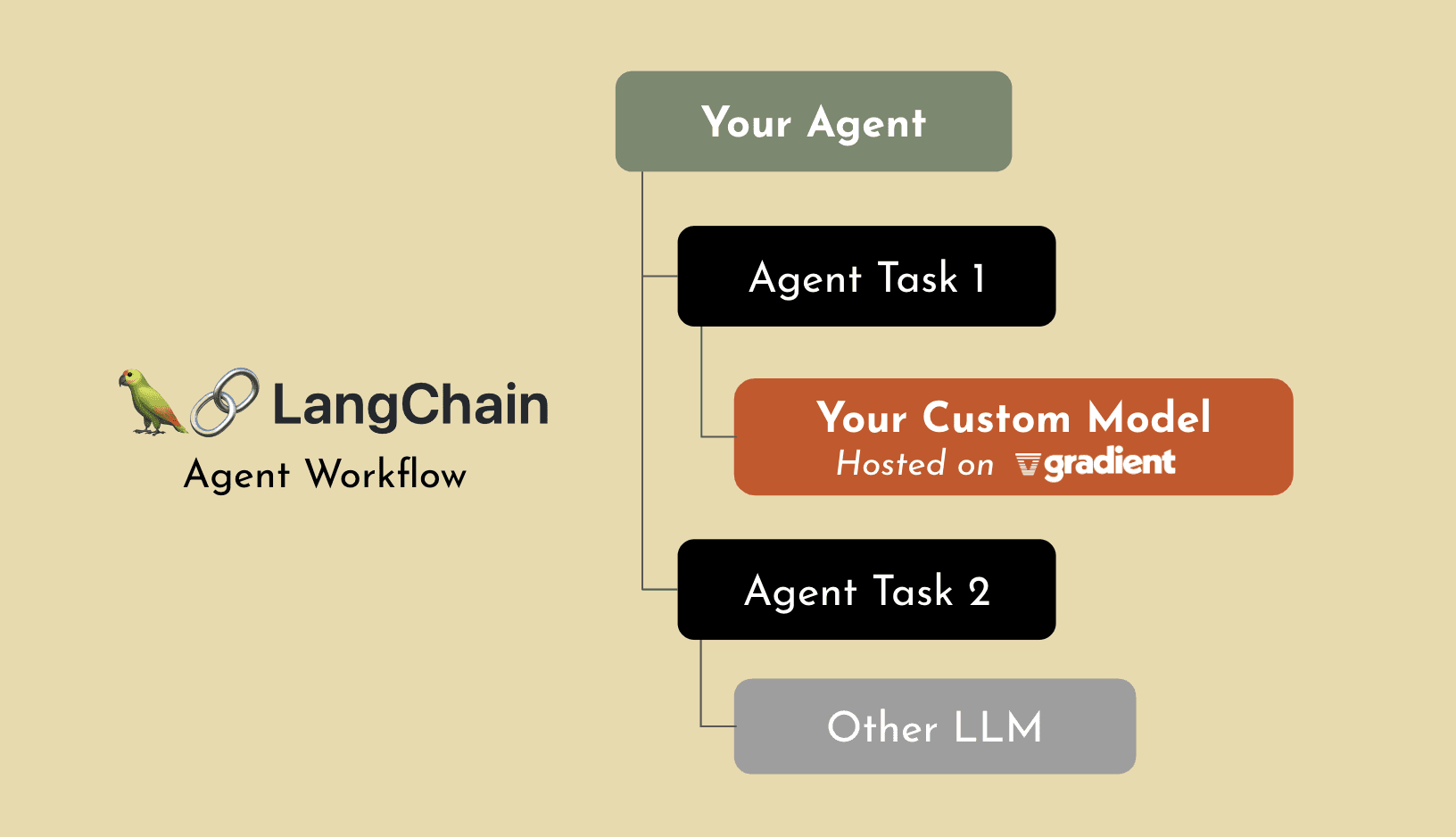

For example, let’s say a user is building an agent that can complete various tasks.

Task 1 may require domain-specific expertise or reference private data, so the user wants it completed by a private, custom LLM that has been fine-tuned on the Gradient platform. Meanwhile, Task 2 only requires general knowledge and involves no private data. Using LangChain, the user can designate which model is best suited to each task.
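The routing idea in this example can be sketched in plain Python. This is a minimal illustration, not the integration's actual API: the `Task` class, the `route` function, and the two stub models are all hypothetical names, and in a real workflow the stubs would be replaced by LangChain LLM objects.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt to a completion.
Model = Callable[[str], str]


@dataclass
class Task:
    name: str
    prompt: str
    needs_private_data: bool  # True when the task needs domain or private knowledge


def route(task: Task, models: Dict[str, Model]) -> str:
    """Send private/domain-specific tasks to the fine-tuned model, others to the general one."""
    key = "fine_tuned" if task.needs_private_data else "general"
    return models[key](task.prompt)


# Hypothetical stand-ins for real models.
def fine_tuned_stub(prompt: str) -> str:
    return f"[fine-tuned] {prompt}"


def general_stub(prompt: str) -> str:
    return f"[general] {prompt}"


MODELS: Dict[str, Model] = {"fine_tuned": fine_tuned_stub, "general": general_stub}

task_1 = Task("Task 1", "Summarize our internal claims policy.", needs_private_data=True)
task_2 = Task("Task 2", "Explain what a deductible is.", needs_private_data=False)

for task in (task_1, task_2):
    print(task.name, "->", route(task, MODELS))
```

Keeping the routing decision in one small function makes it easy to swap either model without touching the tasks themselves.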

With the integration of LangChain, Gradient users will now have the power to:

Precision-Tailor AI Applications: Fine-tune multiple LLMs to specific data sets tailored for different domains and/or tasks, and then select the best model to use for each component of an application, enhancing accuracy and adaptability.

Integrate Workflows Seamlessly: Integrate fine-tuned models directly into complex AI workflows, drastically reducing the time between model training and deployment.

Optimize Resource Allocation: Efficiently allocate resources, ensuring the most cost-effective models are used for each task.

Collaborate More Effectively: Teams can work on different components of an application in parallel and then bring them together in a single integrated workflow.

How to Use Gradient + LangChain

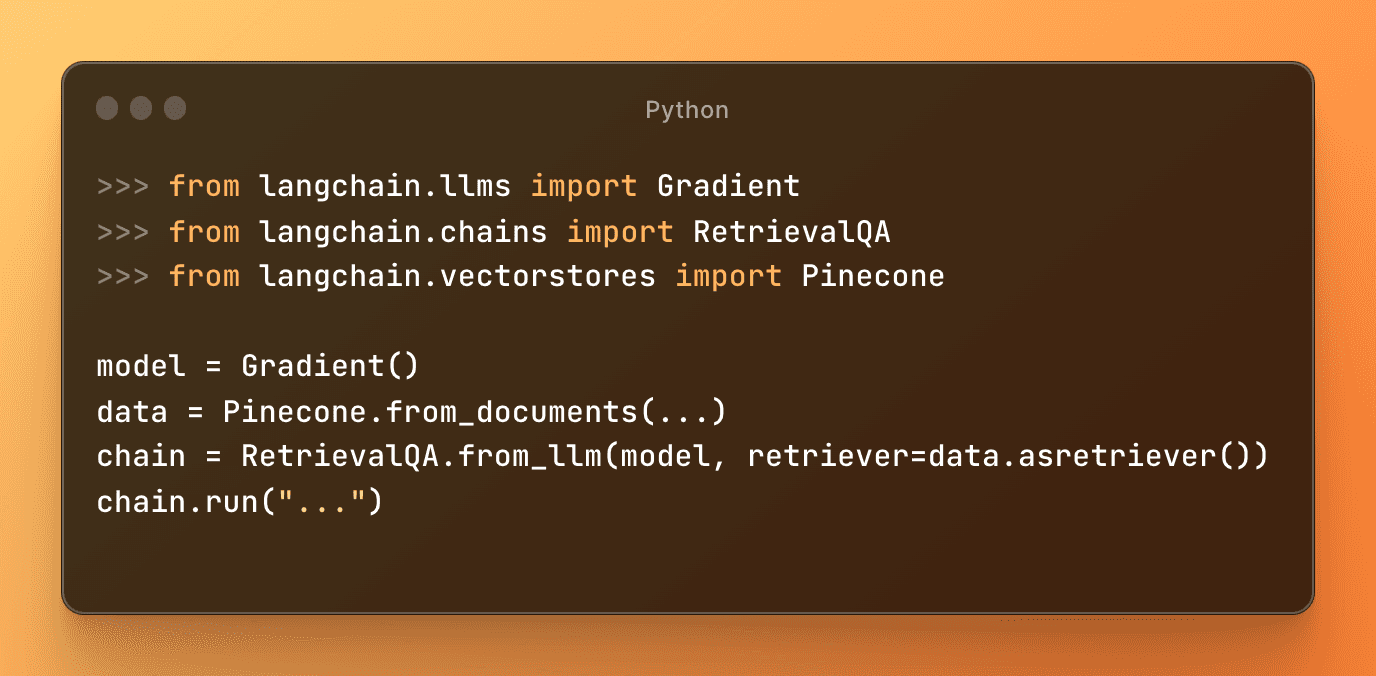

You can use a Gradient-hosted model in the LangChain framework with a few simple lines of code.
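As a rough sketch of what those few lines might look like, the snippet below wraps the setup in two small functions. Treat the details as assumptions to check against the developer documentation: the import path `langchain_community.llms` (older LangChain releases exposed the class under `langchain.llms`), the `GradientLLM` constructor arguments, the environment variable names, and the placeholder model ID are not taken from this post. The helper names `gradient_llm_kwargs` and `build_gradient_llm` are hypothetical.

```python
import os
from typing import Any, Dict, Mapping

# Assumed environment variable names for Gradient credentials.
REQUIRED_ENV = ("GRADIENT_ACCESS_TOKEN", "GRADIENT_WORKSPACE_ID")


def gradient_llm_kwargs(env: Mapping[str, str], model_id: str) -> Dict[str, Any]:
    """Collect constructor arguments, failing early if credentials are missing."""
    missing = [name for name in REQUIRED_ENV if not env.get(name)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return {
        "model": model_id,  # ID of the fine-tuned model on Gradient
        "gradient_access_token": env["GRADIENT_ACCESS_TOKEN"],
        "gradient_workspace_id": env["GRADIENT_WORKSPACE_ID"],
        "model_kwargs": {"max_generated_token_count": 128},
    }


def build_gradient_llm(model_id: str):
    """Create the LangChain LLM wrapper for a Gradient-hosted model."""
    # Imported lazily so this module loads even without LangChain installed.
    from langchain_community.llms import GradientLLM  # assumed import path

    return GradientLLM(**gradient_llm_kwargs(os.environ, model_id))


# Usage (requires valid credentials and network access):
# llm = build_gradient_llm("<your-model-id>")
# print(llm.invoke("What is a deductible?"))
```

Separating the argument collection from the constructor call means a missing credential is reported before any request is made.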

See the developer documentation for more details on the Gradient + LangChain integration.

Try Gradient or Join Us

Gradient is now available to independent developers and companies globally. Sign up and get started with $10 in free credits.

Following the free credits, Gradient comes with transparent, token-based pricing. Companies that require tighter security and governance can contact us to learn more and to see a demo.

Developers and organizations are only beginning to see the potential of LLMs in various applications, and we’re excited to help them realize it more easily and quickly. For anyone looking to join us in this mission, we’re hiring!
