Oct 11, 2023

Introducing the LlamaIndex Integration

Today, Gradient is delighted to share our integration with LlamaIndex, enabling users to build effective RAG systems with Gradient fine-tuned LLMs.

This partnership brings together two leading tools in the AI sector, enabling developers to enhance efficiency, precision, and innovation in AI-focused applications.

About the Gradient Developer Platform

The Gradient Developer Platform allows users to customize and enhance LLMs with domain-specific data, enabling high precision outputs tailored for individual use cases. When building on Gradient, users have full control of the data they use and ownership of the model they create.

About the LlamaIndex Framework

LlamaIndex stands out as the pioneering framework for Retrieval Augmented Generation (RAG) in AI applications. It allows users to retrieve relevant information from a vector database and produce LLM completions based on this additional context.

What this Integration Means

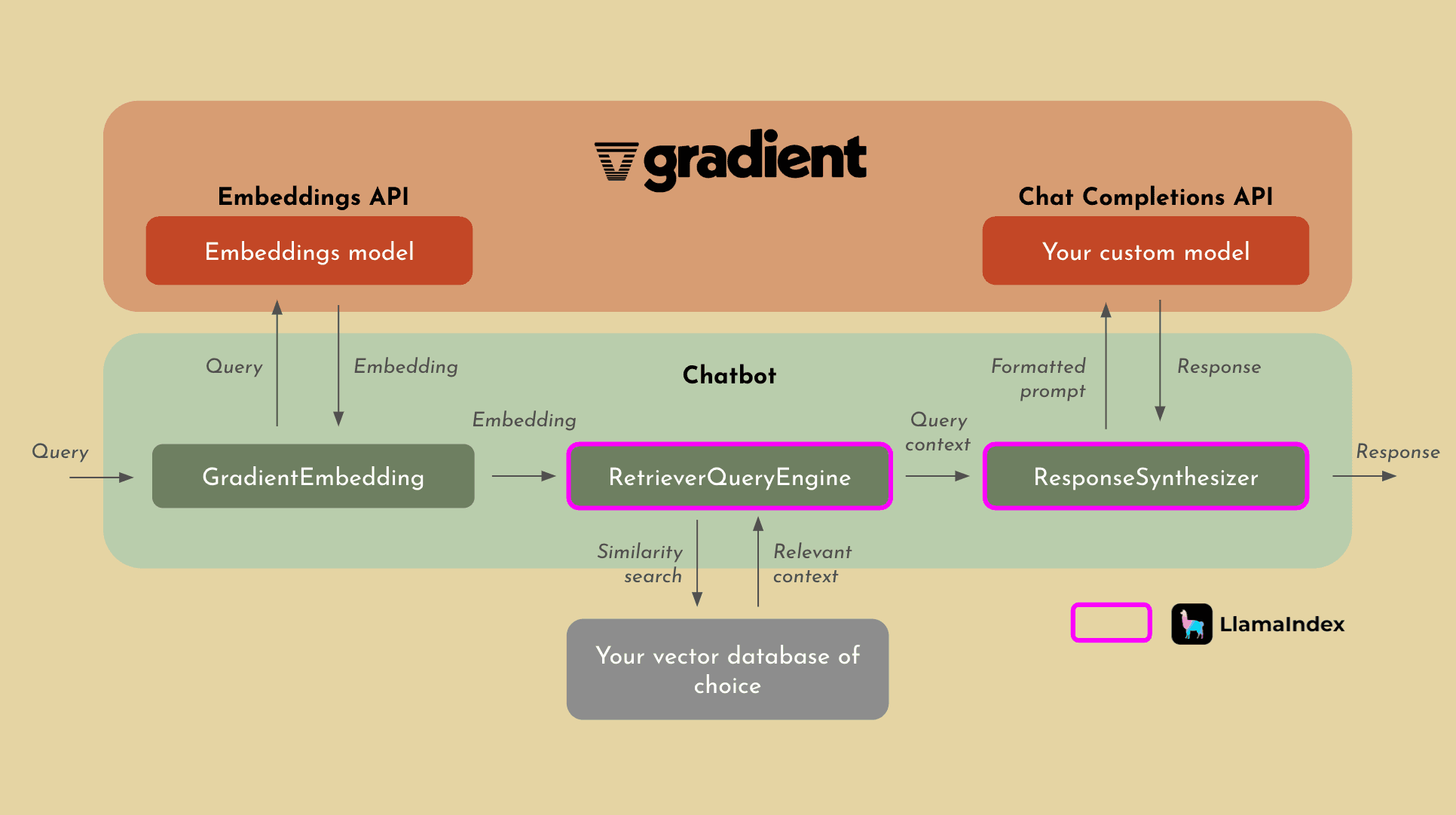

The Gradient and LlamaIndex integration enables users to effectively implement RAG on top of their private, custom models in the Gradient platform. With the LlamaIndex framework, users can generate enhanced completions based on additional information retrieved from an indexed knowledge database.

Let’s break down an example of how RAG works with Gradient and LlamaIndex, step by step:

1. The user submits a query

2. GradientEmbedding takes in the query and calls the Gradient Embeddings API (via LangChain integration) to convert the query to an embedding

3. RetrieverQueryEngine performs a similarity search against the data points in your vector database to find relevant information (via LlamaIndex integration)

4. ResponseSynthesizer reformats the query and relevant information into a single prompt that is sent to the Gradient Chat Completions API (via LlamaIndex integration)

5. The response is returned to the user
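To make the flow above concrete, here is a minimal, self-contained Python sketch of the same embed, retrieve, and synthesize steps. It is a toy illustration only: it does not call the Gradient or LlamaIndex APIs, and every name in it (`embed`, `cosine_similarity`, `retrieve`, `synthesize_prompt`, `DOCUMENTS`) is a hypothetical stand-in. A bag-of-words count vector is assumed in place of a real embedding model, and the final call to a chat completions endpoint is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "knowledge base"; a real system would use a vector database.
DOCUMENTS = [
    "Gradient offers token-based pricing after the free credits.",
    "LlamaIndex retrieves context from an indexed knowledge base.",
    "Fine-tuned models remain owned by the user who created them.",
]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list, top_k: int = 1) -> list:
    """Rank documents by similarity to the query and keep the top_k."""
    query_vector = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def synthesize_prompt(query: str, context: list) -> str:
    """Combine the query and retrieved context into a single prompt string."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    question = "How does pricing work after the free credits?"
    passages = retrieve(question, DOCUMENTS, top_k=1)
    # In a real pipeline this prompt would be sent to an LLM for completion.
    print(synthesize_prompt(question, passages))
```

In the actual integration, the embedding, similarity search, and prompt assembly are handled by the components named in the steps above; the sketch only shows how the pieces relate to one another.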

See the developer documentation for more details on the Gradient + LlamaIndex integration.

Try Gradient or Join Us

Gradient is now available to independent developers and companies globally. Sign up and get started with $5 in free credits.

Following the free credits, Gradient comes with transparent, token-based pricing. Companies that require tighter security and governance can contact us to learn more and to see a demo.

Developers and organizations are only beginning to see the potential of LLMs in various applications, and we’re excited to help them realize it more easily and quickly. For anyone looking to join us in this mission, we’re hiring!